Sunday 5 May 2024

Saturday 4 May 2024

Data Science: The Hard Parts: Techniques for Excelling at Data Science

Python Coding May 04, 2024 Books, Data Science No comments

This practical guide provides a collection of techniques and best practices that are generally overlooked in most data engineering and data science pedagogy. A common misconception is that great data scientists are experts in the "big themes" of the discipline—machine learning and programming. But most of the time, these tools can only take us so far. In practice, the smaller tools and skills really separate a great data scientist from a not-so-great one.

Taken as a whole, the lessons in this book make the difference between an average data scientist candidate and a qualified data scientist working in the field. Author Daniel Vaughan has collected, extended, and used these skills to create value and train data scientists from different companies and industries.

With this book, you will:

Understand how data science creates value

Deliver compelling narratives to sell your data science project

Build a business case using unit economics principles

Create new features for a ML model using storytelling

Learn how to decompose KPIs

Perform growth decompositions to find root causes for changes in a metric

Daniel Vaughan is head of data at Clip, the leading paytech company in Mexico. He's the author of Analytical Skills for AI and Data Science (O'Reilly).

PDF: Data Science: The Hard Parts: Techniques for Excelling at Data Science

Hard Copy: Data Science: The Hard Parts: Techniques for Excelling at Data Science

Streamgraphs using Python

Python Coding May 04, 2024 Data Science No comments

Code:

import matplotlib.pyplot as plt

import numpy as np

x = np.linspace(0, 10, 100)

y1 = np.sin(x)

y2 = np.cos(x)

plt.stackplot(x, y1, y2, baseline='wiggle')

plt.title('Streamgraph')

plt.show()


Statistical Inference and Probability

Python Coding May 04, 2024 Books, Data Science No comments

An experienced author in the field of data analytics and statistics, John MacInnes has produced a straightforward text that breaks down the complex topic of inferential statistics with accessible language and detailed examples. It covers a range of topics, including:

· Probability and Sampling distributions

· Inference and regression

· Power, effect size and inverse probability

Part of The SAGE Quantitative Research Kit, this book will give you the know-how and confidence needed to succeed on your quantitative research journey.

Hard Copy: Statistical Inference and Probability

PDF: Statistical Inference and Probability (The SAGE Quantitative Research Kit)

Friday 26 April 2024

Top 4 Free Mathematics Courses for Data Science!

Python Coding April 26, 2024 Data Science No comments

In the age of big data, understanding statistics and data science concepts is becoming increasingly crucial across various industries. From finance to healthcare, businesses are leveraging data-driven insights to make informed decisions and gain a competitive edge. In this blog post, we'll embark on a journey through fundamental statistical concepts, explore the powerful technique of K-Means Clustering in Python, delve into the realm of probability, and demystify practical time series analysis.

In our tutorial, we'll walk through the implementation of K-Means clustering using Python, focusing on the following steps:

Understanding the intuition behind K-Means clustering.

Preprocessing the data and feature scaling.

Choosing the optimal number of clusters using techniques like the Elbow Method or Silhouette Score.

Implementing K-Means clustering using scikit-learn.

Visualizing the clustering results to gain insights into the underlying structure of the data.
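The intuition behind K-Means can be sketched without any library: the algorithm alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The block below is a minimal pure-Python version of that loop (Lloyd's algorithm), not the scikit-learn implementation mentioned in the steps above. The names `kmeans` and `inertia`, the toy points, and the fixed starting centroids are assumptions made for illustration.

```python
import math


def kmeans(points, centroids, n_iter=10):
    """Minimal K-Means (Lloyd's algorithm) on 2-D points.

    `centroids` holds the starting centers; a production implementation
    would usually pick them randomly or with k-means++.
    """
    centroids = list(centroids)
    labels = []
    for _ in range(n_iter):
        # Assignment step: label each point with its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return labels, centroids


def inertia(points, labels, centroids):
    """Within-cluster sum of squared distances, the quantity used by the Elbow Method."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


# Hypothetical toy data: two visually separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
wcss = inertia(points, labels, centroids)

print(labels)
print(centroids)
print(wcss)
```

Running the same loop for several values of k and plotting the resulting inertia against k is the idea behind the Elbow Method: the "elbow" is the point where adding another cluster stops reducing inertia by much.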

Probability theory is the mathematical framework for analyzing random phenomena and quantifying uncertainty. Whether you're predicting the outcome of a coin toss or estimating the likelihood of a stock market event, probability theory provides the tools to make informed decisions in the face of uncertainty.

In this section, we'll provide an intuitive introduction to probability, covering essential concepts such as:

Basic probability terminology: events, sample space, and outcomes.

Probability axioms and rules: addition rule, multiplication rule, and conditional probability.

Probability distributions: discrete and continuous distributions.

Common probability distributions: Bernoulli, binomial, normal, and Poisson distributions.

Applications of probability theory in real-world scenarios.
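As a small illustration of these concepts, the sketch below enumerates the sample space of two fair dice and applies the addition rule, conditional probability, and the binomial distribution using exact fractions. The two events and the helper names (`prob`, `binomial_pmf`) are assumptions chosen for the example, not part of any course material.

```python
from fractions import Fraction
from itertools import product
from math import comb

# Sample space: all 36 equally likely outcomes of rolling two fair dice.
sample_space = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event (a predicate over outcomes) when outcomes are equally likely."""
    favorable = [o for o in sample_space if event(o)]
    return Fraction(len(favorable), len(sample_space))


def is_sum_seven(outcome):
    return outcome[0] + outcome[1] == 7


def first_is_three(outcome):
    return outcome[0] == 3


p_a = prob(is_sum_seven)
p_b = prob(first_is_three)
p_a_and_b = prob(lambda o: is_sum_seven(o) and first_is_three(o))

# Addition rule: P(A or B) = P(A) + P(B) - P(A and B)
p_a_or_b = p_a + p_b - p_a_and_b

# Conditional probability: P(A | B) = P(A and B) / P(B)
p_a_given_b = p_a_and_b / p_b


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent Bernoulli(p) trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Exactly two heads in four fair coin tosses.
p_two_heads = binomial_pmf(2, 4, Fraction(1, 2))

print(p_a, p_b, p_a_and_b, p_a_or_b, p_a_given_b, p_two_heads)
```

Here P(A | B) equals P(A), which means the two events happen to be independent, so the multiplication rule P(A and B) = P(A) · P(B) also holds for them.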

Practical Time Series Analysis

Python Coding April 26, 2024 Course, Coursera, Data Science No comments

There are 6 modules in this course

Welcome to Practical Time Series Analysis!

Many of us are "accidental" data analysts. We trained in the sciences, business, or engineering and then found ourselves confronted with data for which we have no formal analytic training. This course is designed for people with some technical competencies who would like more than a "cookbook" approach, but who still need to concentrate on the routine sorts of presentation and analysis that deepen the understanding of our professional topics.

In Practical Time Series Analysis we look at data sets that represent sequential information, such as stock prices, annual rainfall, sunspot activity, the price of agricultural products, and more. We look at several mathematical models that might be used to describe the processes which generate these types of data. We also look at graphical representations that provide insights into our data. Finally, we learn how to make forecasts that say intelligent things about what we might expect in the future.

Please take a few minutes to explore the course site. You will find video lectures with supporting written materials as well as quizzes to help emphasize important points. The language for the course is R, a free implementation of the S language. It is a professional environment and fairly easy to learn.

You can discuss material from the course with your fellow learners. Please take a moment to introduce yourself!

Join Free: Practical Time Series Analysis

Time Series Analysis can take effort to learn; we have tried to present those ideas that are "mission critical" in a way where you understand enough of the math to feel satisfied while also being immediately productive. We hope you enjoy the class!

Thursday 18 April 2024

Meta Data Analyst Professional Certificate

Python Coding April 18, 2024 Data Science No comments

Why Take a Meta Data Analyst Professional Certificate?

Collect, clean, sort, evaluate, and visualize data

Apply the Obtain, Sort, Explore, Model, Interpret (OSEMN) framework to guide the data analysis process

Learn to use statistical analysis, including hypothesis testing, regression analysis, and more, to make data-driven decisions

Develop an understanding of the foundational principles underpinning effective data management and usability of data assets within organizational context

Acquire the confidence to add the following skills to your resume:

Data analysis

Python Programming

Statistics

Data management

Data-driven decision making

Data visualization

Linear Regression

Hypothesis testing

Tableau

Join Free: Meta Data Analyst Professional Certificate

What you'll learn

Collect, clean, sort, evaluate, and visualize data

Apply the OSEMN framework to guide the data analysis process, ensuring a comprehensive and structured approach to deriving actionable insights

Use statistical analysis, including hypothesis testing, regression analysis, and more, to make data-driven decisions

Develop an understanding of the foundational principles of effective data management and usability of data assets within organizational context

Professional Certificate - 5 course series

Prepare for a career in the high-growth field of data analytics. In this program, you’ll build in-demand technical skills like Python, Statistics, and SQL in spreadsheets to get job-ready in 5 months or less, no prior experience needed.

Data analysis involves collecting, processing, and analyzing data to extract insights that can inform decision-making and strategy across an organization.

In this program, you’ll learn basic data analysis principles, how data informs decisions, and how to apply the OSEMN framework to approach common analytics questions. You’ll also learn how to use essential tools like SQL, Python, and Tableau to collect, connect, visualize, and analyze relevant data.

You’ll learn how to apply common statistical methods to write hypotheses, working through project scenarios to gain practical experience with designing experiments and analyzing results.

When you complete this full program, you’ll have a portfolio of hands-on projects and a Professional Certificate from Meta to showcase your expertise.

Applied Learning Project

Throughout the program, you’ll get to practice your new data analysis skills through hands-on projects including:

Identifying data sources

Using spreadsheets to clean and filter data

Using Python to sort and explore data

Using Tableau to visualize results

Using statistical analyses

By the end, you’ll have a professional portfolio that you can show to prospective employers or utilize for your own business.

Tuesday 16 April 2024

Do you know the difference between a Data Analyst, a Data Scientist, and a Data Engineer?

Python Coding April 16, 2024 Data Science No comments

Data Analyst

A data analyst sits between business intelligence and data science. They provide vital information to business stakeholders.

Data Management in SQL (PostgreSQL)

Data Analysis in SQL (PostgreSQL)

Exploratory Analysis Theory

Statistical Experimentation Theory

Free Certification: Data Analyst Certification

Data Scientist Associate

A data scientist is a professional responsible for collecting, analyzing and interpreting extremely large amounts of data.

R / Python Programming

Data Manipulation in R/Python

1.1 Calculate metrics to effectively report characteristics of data and relationships between features

● Calculate measures of center (e.g. mean, median, mode) for variables using R or Python.

● Calculate measures of spread (e.g. range, standard deviation, variance) for variables using R or Python.

● Calculate skewness for variables using R or Python.

● Calculate missingness for variables and explain its influence on reporting characteristics of data and relationships in R or Python.

● Calculate the correlation between variables using R or Python.
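The metrics listed under 1.1 can be sketched with Python's standard library alone. The sample values below are hypothetical, the skewness shown is the population (Fisher-Pearson) form, and `skewness` and `pearson` are illustrative helper names rather than anything prescribed by the certification.

```python
import math
import statistics

# Hypothetical sample with one missing value (None).
raw = [2, 4, 4, 5, None, 7, 8]
values = [v for v in raw if v is not None]

# Missingness: share of entries that are missing.
missing_share = raw.count(None) / len(raw)

# Measures of center.
mean = statistics.mean(values)
median = statistics.median(values)
mode = statistics.mode(values)

# Measures of spread.
value_range = max(values) - min(values)
variance = statistics.variance(values)  # sample variance (divides by n - 1)
std_dev = statistics.stdev(values)      # sample standard deviation


def skewness(xs):
    """Population skewness: third central moment divided by sigma cubed."""
    m = statistics.fmean(xs)
    n = len(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    return m3 / m2**1.5


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# A second, perfectly linear variable, so the correlation should be 1.
other = [3 * v + 1 for v in values]

skew = skewness(values)
corr = pearson(values, other)

print(missing_share, mean, median, mode, value_range, variance, std_dev, skew, corr)
```

Dropping the missing entry before computing the statistics is itself an analytic choice; with more missingness, the reported center and spread can shift noticeably depending on how the gaps are handled.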

1.2 Create data visualizations in coding language to demonstrate the characteristics of data

● Create and customize bar charts using R or Python.

● Create and customize box plots using R or Python.

● Create and customize line graphs using R or Python.

● Create and customize histograms using R or Python.

1.3 Create data visualizations in coding language to represent the relationships between features

● Create and customize scatterplots using R or Python.

● Create and customize heatmaps using R or Python.

● Create and customize pivot tables using R or Python.

1.4 Identify and reduce the impact of characteristics of data

● Identify when imputation methods should be used and implement them to reduce the impact of missing data on analysis or modeling using R or Python.

● Describe when a transformation to a variable is required and implement corresponding transformations using R or Python.

● Describe the differences between types of missingness and identify relevant approaches to handling types of missingness.

● Identify and handle outliers using R or Python.
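A minimal sketch of the imputation and outlier items in 1.4, assuming median imputation and the 1.5 × IQR rule. Both are common choices, but the syllabus does not specify them, and the readings below are made up for illustration.

```python
import statistics

# Hypothetical readings; None marks a missing value.
raw = [12, 15, None, 14, 13, 95, None, 16]
observed = [v for v in raw if v is not None]

# Median imputation: the median is less sensitive to extreme values than the mean.
fill_value = statistics.median(observed)
imputed = [fill_value if v is None else v for v in raw]

# 1.5 * IQR rule: flag values far outside the middle 50% of the data.
q1, _, q3 = statistics.quantiles(observed, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [v for v in observed if v < lower or v > upper]

print(imputed)
print(outliers)
```

Whether a flagged value is then removed, capped, or kept depends on whether it is a recording error or a genuine extreme observation.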

Statistical Fundamentals in R/Python

2.1 Perform standard data import, joining and aggregation tasks

● Import data from flat files into R or Python.

● Import data from databases into R or Python.

● Aggregate numeric, categorical variables and dates by groups using R or Python.

● Combine multiple tables by rows or columns using R or Python.

● Filter data based on different criteria using R or Python.

2.2 Perform standard cleaning tasks to prepare data for analysis

● Match strings in a dataset with specific patterns using R or Python.

● Convert values between data types in R or Python.

● Clean categorical and text data by manipulating strings in R or Python.

● Clean date and time data in R or Python.

2.3 Assess data quality and perform validation tasks

● Identify and replace missing values using R or Python.

● Perform different types of data validation tasks (e.g. consistency, constraints, range validation, uniqueness) using R or Python.

● Identify and validate data types in a data set using R or Python.

2.4 Collect data from non-standard formats by modifying existing code

● Adapt provided code to import data from an API using R or Python.

● Identify the structure of HTML and JSON data and parse them into a usable format for data processing and analysis using R or Python.

Importing & Cleaning in R/Python

Machine Learning Fundamentals in R/Python

Free Certification: Data Science

Data Engineer

A data engineer collects, stores, and pre-processes data for easy access and use within an organization. Associate certification is available.

Data Management in SQL (PostgreSQL)

Exploratory Analysis Theory

Free Certification: Data Science

Sunday 14 April 2024

4 Free books to master Data Analytics

Python Coding April 14, 2024 Data Science No comments

Storytelling with Data: A Data Visualization Guide for Business Professionals

Don't simply show your data - tell a story with it!

Storytelling with Data teaches you the fundamentals of data visualization and how to communicate effectively with data. You'll discover the power of storytelling and the way to make data a pivotal point in your story. The lessons in this illuminative text are grounded in theory but made accessible through numerous real-world examples - ready for immediate application to your next graph or presentation.

Storytelling is not an inherent skill, especially when it comes to data visualization, and the tools at our disposal don't make it any easier. This book demonstrates how to go beyond conventional tools to reach the root of your data and how to use your data to create an engaging, informative, compelling story. Specifically, you'll learn how to:

Understand the importance of context and audience

Determine the appropriate type of graph for your situation

Recognize and eliminate the clutter clouding your information

Direct your audience's attention to the most important parts of your data

Think like a designer and utilize concepts of design in data visualization

Leverage the power of storytelling to help your message resonate with your audience

Together, the lessons in this book will help you turn your data into high-impact visual stories that stick with your audience. Rid your world of ineffective graphs, one exploding 3D pie chart at a time. There is a story in your data - Storytelling with Data will give you the skills and power to tell it!

Fundamentals of Data Analytics: Learn Essential Skills, Embrace the Future, and Catapult Your Career in the Data-Driven World—A Comprehensive Guide to Data Literacy for Beginners

Gain a competitive edge in today’s data-driven world and build a rich career as a data professional that drives business success and innovation…

Today, data is everywhere… and it has become the essential building block of this modern society.

And that’s why now is the perfect time to pursue a career in data.

But what does it take to become a competent data professional?

This book is your ultimate guide to understanding the fundamentals of data analytics, helping you unlock the expertise of efficiently solving real-world data-related problems.

Here is just a fraction of what you will discover:

A beginner-friendly 5-step framework to kickstart your journey into analyzing and processing data

How to get started with the fundamental concepts, theories, and models for accurately analyzing data

Everything you ever needed to know about data mining and machine learning principles

Why businesses run on a data-driven culture, and how you can leverage it using real-time business intelligence analytics

Strategies and techniques to build a problem-solving mindset that can overcome any complex and unique dataset

How to create compelling and dynamic visualizations that help generate insights and make data-driven decisions

The 4 pillars of a new digital world that will transform the landscape of analyzing data

And much more.

Believe it or not, you can be terrible in math or statistics and still pursue a career in data.

And this book is here to guide you throughout this journey, so that crunching data becomes second nature to you.

Ready to master the fundamentals and build a successful career in data analytics?

Data Analytics for Absolute Beginners: A Deconstructed Guide to Data Literacy: Python for Data Science, Book 2

Data Analytics, Data Visualization & Communicating Data: 3 books in 1: Learn the Processes of Data Analytics and Data Science, Create Engaging Data Visualizations, and Present Data Effectively

Harvard Business Review called data science “the sexiest job of the 21st century,” so it's no surprise that data science jobs have grown up to 20 times in the last three years. With demand outpacing supply, companies are willing to pay top dollar for talented data professionals. However, to stand out in one of these positions, foundational knowledge of interpreting data is essential. You can be a spreadsheet guru, but without the ability to turn raw data into valuable insights, the data will be rendered useless. That leads us to data analytics and visualization: the ability to examine data sets, draw meaningful conclusions, identify trends, and present those findings to the decision-maker effectively.

Mastering this skill will undoubtedly lead to better and faster business decisions. The three audiobooks in this series will cover the foundational knowledge of data analytics, data visualization, and presenting data, so you can master this essential skill in no time. This series includes:

Everything data analytics: a beginner's guide to data literacy and understanding the processes that turn data into insights.

Beginner's guide to data visualization: how to understand, design, and optimize over 40 different charts.

How to win with your data visualizations: the five-part guide for junior analysts to create effective data visualizations and engaging data stories.

These three audiobooks cover an extensive amount of information, such as:

Overview of the data collection, management, and storage processes.

Fundamentals of cleaning data.

Essential machine learning algorithms required for analysis, such as regression, clustering, classification, and more.

The fundamentals of data visualization.

An in-depth view of over 40 charts and when to use them.

A comprehensive data visualization design guide.

Walkthrough on how to present data effectively.

And so much more!

Tuesday 2 April 2024

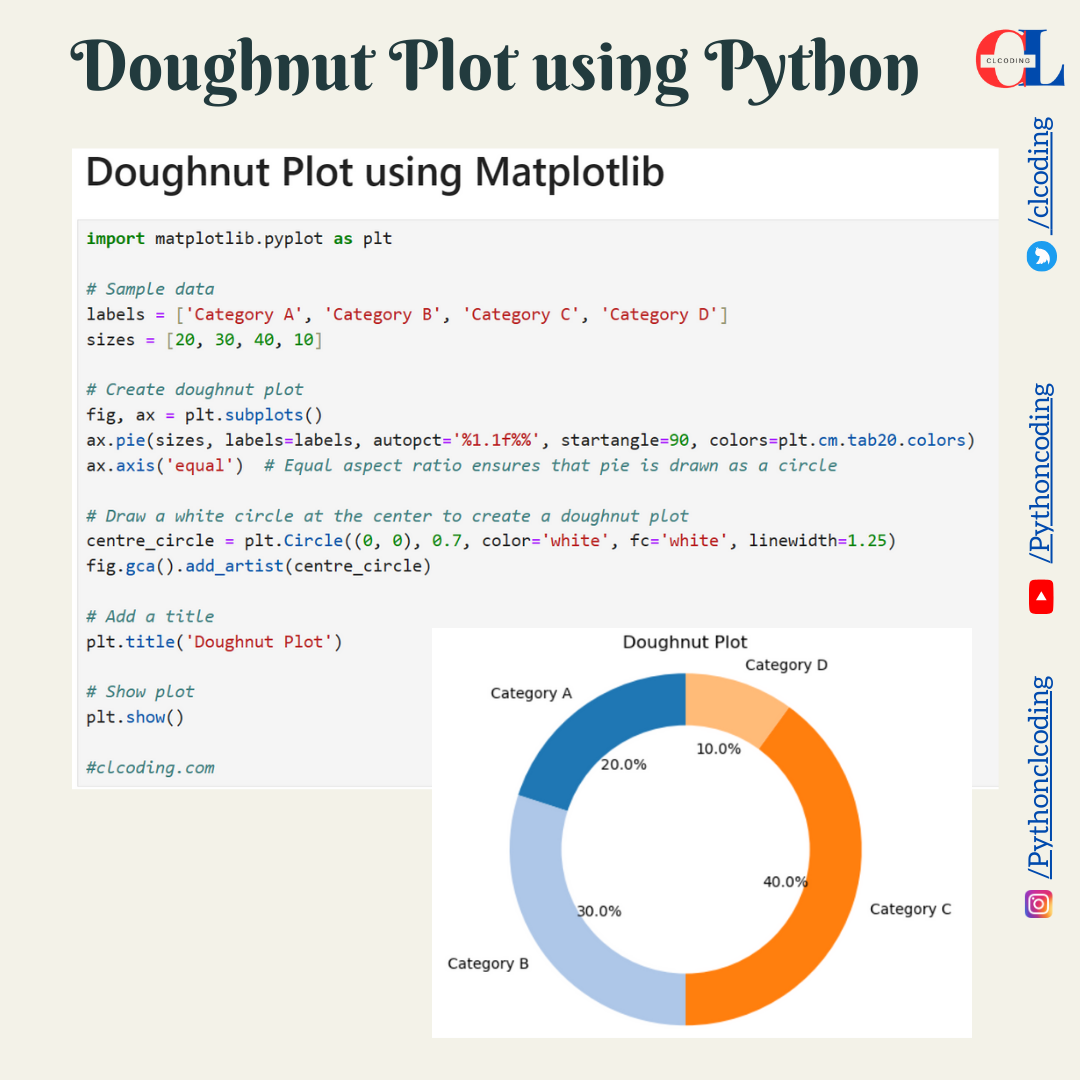

Doughnut Plot using Python

Python Coding April 02, 2024 Data Science, Python No comments

import plotly.graph_objects as go

# Sample data

labels = ['A', 'B', 'C', 'D']

values = [20, 30, 40, 10]

colors = ['#FFA07A', '#FFD700', '#6495ED', '#ADFF2F']

# Create doughnut plot

fig = go.Figure(data=[go.Pie(labels=labels, values=values, hole=.5, marker=dict(colors=colors))])

fig.update_traces(textinfo='percent+label', textfont_size=14, hoverinfo='label+percent')

fig.update_layout(title_text="Customized Doughnut Plot", showlegend=False)

# Show plot

fig.show()

#clcoding.com

import matplotlib.pyplot as plt

# Sample data

labels = ['Category A', 'Category B', 'Category C', 'Category D']

sizes = [20, 30, 40, 10]

explode = (0, 0.1, 0, 0) # "explode" the 2nd slice

# Create doughnut plot

fig, ax = plt.subplots()

ax.pie(sizes, explode=explode, labels=labels, autopct='%1.1f%%', startangle=90, shadow=True, colors=plt.cm.tab20.colors)

ax.axis('equal') # Equal aspect ratio ensures that pie is drawn as a circle

# Draw a white circle at the center to create a doughnut plot

centre_circle = plt.Circle((0, 0), 0.7, color='white', fc='white', linewidth=1.25)

fig.gca().add_artist(centre_circle)

# Add a title

plt.title('Doughnut Plot with Exploded Segment and Shadow Effect')

# Show plot

plt.show()

#clcoding.com

import plotly.graph_objects as go

# Sample data

labels = ['A', 'B', 'C', 'D']

values = [20, 30, 40, 10]

# Create doughnut plot

fig = go.Figure(data=[go.Pie(labels=labels, values=values, hole=.5)])

fig.update_layout(title_text="Doughnut Plot")

# Show plot

fig.show()

#clcoding.com

import matplotlib.pyplot as plt

# Sample data

labels = ['Category A', 'Category B', 'Category C', 'Category D']

sizes = [20, 30, 40, 10]

# Create doughnut plot

fig, ax = plt.subplots()

ax.pie(sizes, labels=labels, autopct='%1.1f%%', startangle=90, colors=plt.cm.tab20.colors)

ax.axis('equal') # Equal aspect ratio ensures that pie is drawn as a circle

# Draw a white circle at the center to create a doughnut plot

centre_circle = plt.Circle((0, 0), 0.7, color='white', fc='white', linewidth=1.25)

fig.gca().add_artist(centre_circle)

# Add a title

plt.title('Doughnut Plot')

# Show plot

plt.show()

#clcoding.com

Friday 8 March 2024

Fractal Data Science Professional Certificate

Python Coding March 08, 2024 Coursera, Data Science No comments

What you'll learn

Apply structured problem-solving techniques to dissect and address complex data-related challenges encountered in real-world scenarios.

Utilize SQL proficiency to retrieve, manipulate data and employ data visualization skills using Power BI to communicate insights.

Apply Python expertise for data manipulation, analysis and implement machine learning algorithms to create predictive models for applications.

Create compelling data stories to influence your audience and master the art of critically analyzing data while making decisions and recommendations.

Join Free: Fractal Data Science Professional Certificate

Professional Certificate - 8 course series

CertNexus Certified Data Science Practitioner Professional Certificate

Python Coding March 08, 2024 Coursera, Data Science No comments

Advance your career with in-demand skills

Receive professional-level training from CertNexus

Demonstrate your technical proficiency

Earn an employer-recognized certificate from CertNexus

Prepare for an industry certification exam

Join Free: CertNexus Certified Data Science Practitioner Professional Certificate

Professional Certificate - 5 course series

IBM Data Engineering Professional Certificate

Python Coding March 08, 2024 Coursera, Data Science, IBM No comments

What you'll learn

Master the most up-to-date practical skills and knowledge data engineers use in their daily roles

Learn to create, design, & manage relational databases & apply database administration (DBA) concepts to RDBMSs such as MySQL, PostgreSQL, & IBM Db2

Develop working knowledge of NoSQL & Big Data using MongoDB, Cassandra, Cloudant, Hadoop, Apache Spark, Spark SQL, Spark ML, and Spark Streaming

Implement ETL & Data Pipelines with Bash, Airflow & Kafka; architect, populate, deploy Data Warehouses; create BI reports & interactive dashboards

Join Free: IBM Data Engineering Professional Certificate

Professional Certificate - 13 course series

Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate

Python Coding March 08, 2024 Books, Data Science, Google No comments

What you'll learn

Identify the purpose and value of the key Big Data and Machine Learning products in Google Cloud.

Employ BigQuery to carry out interactive data analysis.

Use Cloud SQL and Dataproc to migrate existing MySQL and Hadoop/Pig/Spark/Hive workloads to Google Cloud.

Choose between different data processing products on Google Cloud.

Join Free: Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate

Professional Certificate - 6 course series

Tableau Business Intelligence Analyst Professional Certificate

Python Coding March 08, 2024 Books, Data Science No comments

What you'll learn

Gain the essential skills necessary to excel in an entry-level Business Intelligence Analytics role.

Learn to use Tableau Public to manipulate and prepare data for analysis.

Craft and dissect data visualizations that reveal patterns and drive actionable insights.

Construct captivating narratives through data, enabling stakeholders to explore insights effectively.

Join Free: Tableau Business Intelligence Analyst Professional Certificate

Professional Certificate - 8 course series

Wednesday 6 March 2024

Data Analysis and Visualization Foundations Specialization

Python Coding March 06, 2024 Coursera, Data Science, IBM No comments

What you'll learn

Describe the data ecosystem, tasks a Data Analyst performs, as well as skills and tools required for successful data analysis

Explain basic functionality of spreadsheets and utilize Excel to perform a variety of data analysis tasks like data wrangling and data mining

List various types of charts and plots and create them in Excel as well as work with Cognos Analytics to generate interactive dashboards

Join Free: Data Analysis and Visualization Foundations Specialization

Specialization - 4 course series

IBM AI Foundations for Business Specialization

Python Coding March 06, 2024 AI, Data Science, IBM No comments

Advance your subject-matter expertise

Learn in-demand skills from university and industry experts

Master a subject or tool with hands-on projects

Develop a deep understanding of key concepts

Earn a career certificate from IBM

Join Free: IBM AI Foundations for Business Specialization

Specialization - 3 course series

IBM & Darden Digital Strategy Specialization

Python Coding March 06, 2024 Data Science, IBM No comments

What you'll learn

Understand the value of data and how the rapid growth of technologies such as artificial intelligence and cloud computing are transforming business.

Join Free: IBM & Darden Digital Strategy Specialization

Specialization - 6 course series

Data Science Fundamentals with Python and SQL Specialization

Python Coding March 06, 2024 Data Science, IBM, Python, SQL No comments

What you'll learn

Working knowledge of Data Science Tools such as Jupyter Notebooks, R Studio, GitHub, Watson Studio

Python programming basics including data structures, logic, working with files, invoking APIs, and libraries such as Pandas and Numpy

Statistical Analysis techniques including Descriptive Statistics, Data Visualization, Probability Distribution, Hypothesis Testing and Regression

Relational Database fundamentals including SQL query language, Select statements, sorting & filtering, database functions, accessing multiple tables

Join Free: Data Science Fundamentals with Python and SQL Specialization

Specialization - 5 course series

Introduction to Data Science Specialization

Python Coding March 06, 2024 Books, Coursera, Data Science No comments

What you'll learn

Describe what data science and machine learning are, their applications & use cases, and various types of tasks performed by data scientists

Gain hands-on familiarity with common data science tools including JupyterLab, R Studio, GitHub and Watson Studio

Develop the mindset to work like a data scientist, and follow a methodology to tackle different types of data science problems

Write SQL statements and query Cloud databases using Python from Jupyter notebooks

Join Free: Introduction to Data Science Specialization

Specialization - 4 course series

