Wednesday, 5 November 2025

Generative Deep Learning with TensorFlow

Python Developer November 05, 2025 Deep Learning No comments

Introduction

Generative deep learning is a rapidly advancing area of AI that focuses on creating data — images, text, audio — rather than just making predictions. This course on Coursera is part of the TensorFlow: Advanced Techniques Specialization and is designed to teach you how to build, train and deploy generative models using the TensorFlow framework. If you want to go beyond standard classification/regression tasks and learn how to generate new content, this course is a strong choice.

Why This Course Matters

- Generative models (e.g., style transfer, autoencoders, GANs) open up creative and practical applications, from artistic image creation to synthetic data generation for training.
- The course uses TensorFlow, a production-ready and industry-standard deep learning framework, so the skills you gain are applicable in real projects.
- It offers a structured path through cutting-edge topics (style transfer, autoencoders, generative adversarial networks) rather than just theory.
- For learners who already have a foundation in deep learning or TensorFlow, this course acts as a natural step to specialise in generative AI.

What You’ll Learn

The course is divided into modules that cover different generative tasks. According to the syllabus:

Module 1: Style Transfer

You’ll learn how to extract the content of one image (for example, a photo of a swan) and the style of another (for example, a painting), then combine them into a new image. The technique uses transfer learning and deep convolutional neural networks to extract features.

Assignments and labs allow you to experiment with style transfer in TensorFlow.
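To make the content/style split concrete, here is a minimal sketch of the two loss terms commonly used in neural style transfer (the Gram-matrix formulation). Whether the course uses exactly this formulation is an assumption. The function names and the toy feature maps are illustrative, and plain Python lists stand in for the CNN activations that TensorFlow would produce.

```python
# Illustrative sketch only: feature maps are plain lists shaped
# [channels][positions], standing in for CNN layer activations.

def gram_matrix(features):
    """Channel-by-channel correlations, averaged over spatial positions."""
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n_positions
         for row_j in features]
        for row_i in features
    ]


def mean_squared_difference(a, b):
    """Mean squared difference between two equally shaped 2-D lists."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b)
             for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def content_loss(generated, content):
    """Compares raw activations, so spatial layout matters."""
    return mean_squared_difference(generated, content)


def style_loss(generated, style):
    """Compares Gram matrices, so only channel co-activation matters."""
    return mean_squared_difference(gram_matrix(generated), gram_matrix(style))


# Toy activations: 2 channels, 4 spatial positions (made-up numbers).
style_features = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
# Same values with every position shifted by one: layout differs, texture does not.
shifted_features = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]

print(style_loss(shifted_features, style_features))    # 0.0
print(content_loss(shifted_features, style_features))  # 1.0
```

The shifted feature map has zero style loss but a large content loss, which is the property that lets the two objectives be traded off against each other when optimising the generated image.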

Module 2: Autoencoders & Representation Learning

You’ll explore autoencoders: neural networks that compress their input into a compact latent code (encoding) and then reconstruct the input from that code (decoding), learning meaningful latent representations along the way. This is foundational for many generative tasks. (The module listing names autoencoders explicitly.)

Module 3: Generative Adversarial Networks (GANs)

You’ll dive into GANs, models that pit a generator network against a discriminator network to create realistic synthetic data. You’ll learn how they are built, trained and evaluated in TensorFlow. (GANs appear among the generative model architectures in the course search listing.)
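The generator/discriminator tug-of-war is easiest to see in the loss functions. The sketch below assumes the standard binary cross-entropy discriminator loss and the non-saturating generator loss; the course may use a different variant. The score lists are made-up discriminator outputs, not results from a trained model.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real samples should score 1, fakes 0."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating form: the generator wants its fakes to score 1."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


# Scores are discriminator outputs in (0, 1): the estimated probability "real".
undecided = [0.5, 0.5]
confident_real = [0.9, 0.9]
confident_fake = [0.1, 0.1]

print(discriminator_loss(undecided, undecided))            # about 1.386 (2 * ln 2)
print(discriminator_loss(confident_real, confident_fake))  # about 0.211
print(generator_loss(confident_fake))                      # about 2.303
```

When the discriminator confidently spots the fakes its own loss is low while the generator's loss is high; training alternates updates so that each network pushes against the other.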

Module 4: Advanced Generative Models & Applications

You’ll apply generative modelling to real-world datasets and use TensorFlow tools to customise, tune and deploy generative systems. The labs and assignments let you build complete pipelines: data preparation, model building, training, evaluation and, finally, output generation.

Who Should Take This Course

This course is ideal for:

- Learners who already know Python and have some familiarity with deep learning (e.g., CNNs, RNNs) and want to specialise in generative modelling.
- Data scientists, ML engineers or developers who want to add generative capabilities (image/text/audio generation) to their skill set.
- Researchers or hobbyists interested in creative AI: building tools that generate art, augment data, or synthesize content.
- Anyone who has completed a foundational deep-learning course or worked with TensorFlow and wants to go further.

If you’re completely new to deep learning or TensorFlow, you may find the content challenging; some prior experience helps.

How to Get the Most Out of It

- Set up your environment early: Make sure you can run TensorFlow (preferably GPU-enabled) and access Jupyter notebooks or Colab.
- Work hands-on: As you learn each generative model type (style transfer, autoencoder, GAN), type the code yourself and experiment with parameters, data and architecture.
- Build mini-projects: After each module, choose a small project, e.g., apply style transfer to images from your phone, build an autoencoder for your own dataset, or build a simple GAN.
- Understand the theory behind models: Generative models involve special training dynamics (e.g., GAN instability, mode collapse, representation bottlenecks). Take time to understand these challenges.
- Explore deployment and output usage: Generative model output often serves as input to other systems (art creation, content pipelines). Try to connect model output to downstream use: save generated images, build a simple web interface or pipeline.
- Keep a portfolio: Track your model outputs, the changes you made, the results you achieved. A study notebook or GitHub repo can help you showcase your generative modelling skills.

What You’ll Walk Away With

After completing the course, you should be able to:

- Implement style transfer models and generate blended images combining content and style.
- Build autoencoders in TensorFlow, understand latent representations and reconstruct inputs.
- Develop, train and evaluate GANs and other generative frameworks with TensorFlow.
- Understand the practical nuances of generative model training: loss functions, stability, architecture choices.
- Use TensorFlow’s APIs and integrate generative model pipelines into your projects or workflows.
- Demonstrate generative modelling skills in your portfolio, potentially a distinguishing factor for roles in AI research, creative AI, data augmentation and synthetic data generation.

Join Now: Generative Deep Learning with TensorFlow

Conclusion

Generative Deep Learning with TensorFlow is a compelling course for anyone who wants to go beyond predictive modelling and dive into the creative side of machine learning. By focusing on generative architectures, hands-on projects and a professional deep-learning framework, it gives you both the how and the why of generative AI. If you’re ready to push your skills from “understanding deep learning” to “creating new data and content”, this course is a strong step forward.

Python Essentials for MLOps

Python Developer November 05, 2025 Machine Learning, Python No comments

Introduction

In modern AI/ML workflows, knowing how to train a model is only part of what’s required. Equally important is being able to operate, deploy, test, maintain, and automate machine-learning systems. That’s the domain of MLOps (Machine Learning Operations). The “Python Essentials for MLOps” course is designed specifically to equip learners with the foundational Python skills needed to thrive in MLOps roles: from scripting and testing, to building command-line tools and HTTP APIs, to managing data with NumPy and Pandas. If you’re a developer, data engineer, or ML practitioner wanting to move into production-ready workflows, this course offers a strong stepping stone.

Why This Course Matters

- Many ML-centric courses stop at models; this one bridges into operations, the work of making models run reliably in real systems.
- Python remains the lingua franca of data science and ML engineering. Gaining robust competence in Python scripting, testing, data manipulation, and APIs is essential for MLOps roles.
- As organisations deploy more ML into production, there’s growing demand for engineers who understand not just modelling but the full lifecycle, and this course prepares you to be part of that lifecycle.
- The course is intermediate-level, making it suitable for those who already know basic Python but want to specialise towards MLOps.

What You’ll Learn

The course is structured into five modules with hands-on assignments, labs and quizzes. Key themes include:

Module 1: Introduction to Python

You’ll learn how to use variables, control logic, and Python data structures (lists, dictionaries, sets, tuples) for tasks like loading, iterating over and persisting data. This sharpens the scripting skills that underpin any automation.

Module 2: Python Functions and Classes

You’ll move into defining functions, classes, and methods — organizing code for reuse, readability and maintainability. These are the building blocks of larger, robust systems.

Module 3: Testing in Python

A crucial but often overlooked area: you will learn how to write, run and debug tests using tools like pytest, ensuring your code doesn’t just run but behaves correctly. For MLOps this is indispensable.
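As a minimal sketch of what pytest-style testing looks like, the hypothetical file below defines one preprocessing helper and three tests for it. The function `min_max_scale` and the file name are invented for illustration and do not come from the course. pytest collects functions whose names start with `test_` and treats a failing `assert` as a test failure.

```python
# test_scaling.py: hypothetical file name; pytest collects files named test_*.py

def min_max_scale(values):
    """Scale numbers linearly into [0, 1]; a typical preprocessing helper."""
    if not values:
        raise ValueError("values must not be empty")
    low, high = min(values), max(values)
    if low == high:
        return [0.0 for _ in values]  # avoid division by zero on constant input
    return [(v - low) / (high - low) for v in values]


def test_scales_into_unit_interval():
    assert min_max_scale([10, 20, 30]) == [0.0, 0.5, 1.0]


def test_constant_input_maps_to_zero():
    assert min_max_scale([7, 7]) == [0.0, 0.0]


def test_empty_input_is_rejected():
    # With pytest installed, `with pytest.raises(ValueError):` is the idiomatic form.
    try:
        min_max_scale([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```

Running `pytest test_scaling.py` from the same directory would execute all three tests and report each pass or failure separately.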

Module 4: Introduction to Pandas and NumPy

You’ll work with data: loading datasets, then manipulating, transforming and visualizing them. Using Pandas and NumPy you’ll apply data operations typical in ML pipelines, such as cleaning data and manipulating numerical arrays and data frames.

Module 5: Applied Python for MLOps

You’ll bring it all together by building command-line tools and HTTP APIs that wrap ML models or parts of ML workflows. You’ll learn how to expose functionality via APIs and automate tasks — bringing scripting into operationalisation.
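A command-line tool of the kind described here can be sketched with the standard library's `argparse`. The tool below is hypothetical (its name, arguments and the "scores" it summarises are invented for illustration); it shows the usual pattern of keeping parsing in one function and logic in another so the logic stays testable.

```python
import argparse
import statistics


def build_parser():
    parser = argparse.ArgumentParser(
        description="Summarise numeric model scores (illustrative tool)."
    )
    parser.add_argument("scores", nargs="+", type=float,
                        help="one or more numeric scores")
    parser.add_argument("--metric", choices=["mean", "median"], default="mean",
                        help="summary statistic to report")
    return parser


def main(argv=None):
    """Parse arguments and return the requested statistic."""
    args = build_parser().parse_args(argv)
    if args.metric == "median":
        return statistics.median(args.scores)
    return statistics.mean(args.scores)


# Passing an explicit list mimics what the shell would supply on the command line.
print(main(["1", "2", "3"]))                              # 2.0
print(main(["0.8", "0.9", "1.0", "--metric", "median"]))  # 0.9
```

In a real script, the last two lines would be replaced by the usual `if __name__ == "__main__":` guard that prints `main()`. Because `main` takes its arguments as a list, the same function could also be called from a test or from an HTTP route handler.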

Each module includes video lectures, readings, hands-on labs, and assignments to reinforce the material.

Who Should Take This Course?

This course is ideal for:

- Developers or engineers who know basic Python and want to specialise in ML operations or production-ready ML systems.
- Data scientists who have built models but lack experience in the “ops” side: deployment, scripting, automation, API integration.
- Data engineers or DevOps engineers who want to add ML to their workflow and need strong Python and ML pipeline scripting skills.
- Students or self-learners preparing for MLOps roles and wanting a structured, project-driven introduction.

If you are completely new to programming or have no Python experience, you might find some sections fast-paced; it helps to be familiar with Python fundamentals before starting.

How to Get the Most Out of It

- Install and use your tools: Set up a Python environment (virtualenv or conda), install Pandas, NumPy and pytest, and try all examples yourself.
- Code along: When the course shows an example of writing a class or building an API, pause and try to write your own variant.
- Build a mini-project: For example, build a small script that loads data via Pandas, computes a metric and exposes it via an HTTP endpoint (Flask or FastAPI), carrying the material from Module 4 into Module 5.
- Write tests: Use pytest to test your functions and classes. This will solidify your understanding of testing and robustness.
- Document your work: Keep a notebook or GitHub repo of the assignments, labs and code you write. This becomes a portfolio of your MLOps scripting skills.
- Connect to ML workflows: Even though this course is Python-centric, always ask: how would this script or API fit into a larger ML pipeline? This mindset will help you later.
- Revisit and reflect: Modules with data manipulation or API building may need more than one pass; work slowly until you feel comfortable.

What You’ll Walk Away With

After completing this course you should be able to:

- Use Python proficiently for scripting, data structures, functions and classes.
- Write and debug tests (pytest) to validate your code and ensure robustness.
- Manipulate data using Pandas and NumPy: cleaning, transforming, visualising.
- Build command-line tools and HTTP APIs to wrap or expose parts of ML workflows.
- Understand how your scripting and tooling skills contribute to MLOps pipelines (automation, deployment, interfaces).
- Demonstrate these skills via code examples, mini-projects and a GitHub portfolio, which is valuable for roles in ML engineering and MLOps.

Join Now: Python Essentials for MLOps

Conclusion

Python Essentials for MLOps is a practical and timely course for anyone ready to move from model experimentation into operational ML systems. It focuses on the engineering side of ML workflows: scripting, data manipulation, testing and API engineering — all in Python. For those aiming at MLOps or ML-engineering roles, completing this course gives you core skills that are increasingly in demand.

Skill Up with Python: Data Science and Machine Learning Recipes

Python Developer November 05, 2025 Data Science, Machine Learning, Python No comments

Introduction

In the present data-driven world, knowing Python alone isn’t enough. The power comes from combining Python with data science, machine learning and practical workflows. The “Skill Up with Python: Data Science and Machine Learning Recipes” course on Coursera offers exactly that: a compact, project-driven introduction to Python for data-science and ML tasks—scraping data, analysing it, building machine-learning components, handling images and text. It’s designed for learners who have some Python background and want to apply it to real-world ML/data tasks rather than purely theory.

Why This Course Matters

- Hands-on, project-centric: Rather than long theory modules, this course emphasises building tangible skills: sentiment analysis, image recognition, web scraping, data manipulation.
- Short and focused: The course is only about 4 hours long, making it ideal as a fast up-skill module.
- Relevance for real-world tasks: Many data science roles involve cleaning/scraping data, analysing text/image/unstructured data, building quick ML pipelines. This course directly hits those points.
- Good fit for career-readiness: For developers who know Python and want to move toward data science/ML roles, or data analysts wanting to expand into ML, this course gives a rapid toolkit.

What You’ll Learn

Although short, the course is organised as a single module that covers multiple “recipes.” Here’s a breakdown of the content and key skills:

Module: Python Data Science & ML Recipes

- You’ll set up your environment and learn to work in Jupyter Notebooks (load data, visualise, manipulate).
- Data manipulation and visualisation using tools like Pandas.
- Sentiment analysis: using libraries like NLTK to process text and build a sentiment-analysis pipeline (pre-processing text, tokenising, classifying).
- Image recognition: using a library such as OpenCV to load and recognise images and build a simple recognition workflow.
- Web scraping: using Beautiful Soup (or similar) to retrieve web data, then parse and format it for further analysis.
- The course includes 5 assignments/quizzes aligned to these: manipulating/visualising data, a sentiment analysis task, an image recognition task, a web scraping task, and a final assessment.
- By the end, you will have tried out three concrete workflows (text, image, web data) and seen how Python can bring them together.
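The three stages named for the sentiment recipe (pre-processing, tokenising, classifying) can be sketched without any third-party library. The example below deliberately swaps NLTK for a tiny hand-made word list, so it illustrates the shape of the pipeline only; the lexicon, function names and sample sentences are all invented.

```python
import re

# Tiny hand-made lexicon, purely illustrative; real pipelines use far larger resources.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "poor", "hate", "terrible"}
NEGATIONS = {"not", "never", "no"}


def tokenize(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(tokens):
    """Sum +1/-1 per lexicon hit, flipping the sign right after a negation word."""
    score = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True
            continue
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        score += -polarity if negate else polarity
        negate = False  # a negation only affects the next token
    return score


def classify(text):
    """Map the numeric score to a label."""
    score = sentiment_score(tokenize(text))
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify("I love this course, the labs are great!"))  # positive
print(classify("The audio was not good."))                  # negative
```

A library-based version would typically replace `tokenize` with a proper tokenizer and the word lists with a trained classifier or a curated sentiment lexicon, while keeping the same three-stage structure.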

Skills You Gain

- Data manipulation (Pandas)
- Working in Jupyter Notebooks
- Text mining/NLP (sentiment analysis)
- Image analysis (computer vision basics)
- Web scraping (unstructured to structured data)
- Basic applied machine learning pipelines (data → feature → model → result)

Who Should Take This Course?

- Python programmers who have the basics (syntax, data types, logic) and want to expand into data science and ML.
- Data analysts or professionals working with data who want to add machine learning and automated workflows.
- Students or career-changers seeking a quick introduction to combining Python with ML and data tasks for projects.
- Developers or engineers looking to add “data/ML” to their toolkit without committing to a long specialization.

If you are brand new to programming or have no Python experience, you might find the modules fast-paced, so consider taking a basic Python or data-analysis course first.

How to Get the Most Out of It

- Set up your environment early: install Python, Jupyter Notebook, Pandas, NLTK, OpenCV and Beautiful Soup so you can code along.
- Code actively: When the instructor demonstrates sentiment analysis or image recognition, don’t just watch; pause, type out the code, change parameters, try new data.
- Extend each “recipe”: After you complete the built-in assignment, try modifying it: e.g., use a different text dataset, build a classifier for image types you choose, scrape a website you care about.
- Document your work: Keep the notebooks/assignments you complete and note down what you changed, what worked and what didn’t; this becomes portfolio material.
- Reflect on “what next”: Since this is a short course, use it as a foundation. Ask: what deeper course am I ready for? What project could I build?
- Combine workflows: The course gives separate recipes; you might attempt to combine them: e.g., scrape web data, analyse text, visualise results, feed into a basic ML model.
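The scraping step, turning unstructured HTML into structured records, can be sketched with the standard library alone. This example uses `html.parser` as a stand-in for Beautiful Soup and parses an inline, made-up HTML snippet instead of fetching a live page, so both the markup and the class name are assumptions for illustration.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (text, href) pairs for every <a> element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._current_href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._text_parts).strip()
            self.links.append((text, self._current_href))
            self._current_href = None


# Inline sample page (made up) so the example runs without network access.
SAMPLE_HTML = """
<ul>
  <li><a href="/courses/mlops">Python Essentials for MLOps</a></li>
  <li><a href="/courses/recipes">Data Science <b>Recipes</b></a></li>
</ul>
"""

extractor = LinkExtractor()
extractor.feed(SAMPLE_HTML)
for text, href in extractor.links:
    print(f"{text} -> {href}")
```

The resulting list of tuples is the "structured" form: it could be loaded into a Pandas data frame or passed to the text-analysis step when combining recipes.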

What You’ll Walk Away With

After finishing the course you should have:

- A practical understanding of how to use Python for data manipulation, visualization and basic ML tasks.
- Experience building three distinct pipelines: sentiment analysis (text), image recognition (vision), and web data scraping.
- Confidence using Jupyter Notebooks and libraries like Pandas, NLTK, OpenCV, Beautiful Soup.
- At least three small “recipes” or mini-projects you can show or build further.
- A clearer idea of what area you’d like to focus on next (text/data, image/vision, web scraping/automation) and what deeper course to pursue next.

Join Now: Skill Up with Python: Data Science and Machine Learning Recipes

Conclusion

Skill Up with Python: Data Science and Machine Learning Recipes is a compact yet powerful course for those wanting to move quickly into applied Python-based data science and ML workflows. It strikes a balance between breadth (text, image, web data) and depth (hands-on assignments), making it ideal for mid-level Python programmers or data analysts looking to add machine learning capability.

Tuesday, 4 November 2025

Python Coding Challenge - Question with Answer (01051125)

Python Coding November 04, 2025 Python Quiz No comments

Step 1:

count = 0

We start with a variable count and set it to 0.

Step 2:

for i in range(1, 5):

range(1, 5) means the numbers from 1 to 4 (remember, Python stops one number before 5).

So the loop runs with:

i = 1, i = 2, i = 3, i = 4

Step 3:

count += i means:

count = count + i

So, step by step:

| i | count (before) | count = count + i | count (after) |
|---|---|---|---|
| 1 | 0 | 0 + 1 | 1 |
| 2 | 1 | 1 + 2 | 3 |
| 3 | 3 | 3 + 3 | 6 |
| 4 | 6 | 6 + 4 | 10 |

Step 4:

print(count) prints 10

✅ Final Output:

10
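Putting the steps together, the program being traced is presumably the following. The post shows it only piece by piece, so the exact layout is an assumption; the comments restate the walkthrough.

```python
count = 0
for i in range(1, 5):  # i takes the values 1, 2, 3, 4
    count += i         # running total: 1, 3, 6, 10
print(count)
```

Running it prints the sum 1 + 2 + 3 + 4.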

Monday, 3 November 2025

Deep Learning with Python, Third Edition

Python Developer November 03, 2025 Deep Learning No comments

Introduction

Deep learning has transformed how we build intelligent systems, from image recognition to language understanding and generative models. This book takes you to the heart of those transformations. The third edition broadens the scope considerably, covering not only the fundamentals of neural networks but also generative AI, transformers, LLMs, and modern frameworks like Keras 3, PyTorch and JAX. It’s designed for developers, data scientists, and machine-learning practitioners who want to go beyond basic models and build state-of-the-art deep-learning workflows in Python.

Why This Book Matters

- It is authored by François Chollet, creator of the Keras library, giving you insights from someone who helped shape the deep-learning ecosystem.
- It updates and expands on earlier editions with modern deep-learning topics: building your own GPT-style models, diffusion models for image generation, time-series forecasting, segmentation, object detection.
- It covers multiple frameworks (Keras, TensorFlow, PyTorch, JAX) so you’re not locked into one tooling path.
- It balances theory and practice: you’ll get code-first examples, layer-by-layer explanations, then full projects.
- It’s suitable for developers with intermediate Python skills but no prior deep-learning or heavy linear-algebra background; the authors aim to make deep learning approachable.

What the Book Covers

Here is a breakdown of the major content and how you’ll move through the material:

Part I: Foundations

- It begins with “What is deep learning?”, clarifying how it fits within AI/machine learning, what makes it unique, and touching on generative AI trends.
- The mathematical building blocks: tensors, tensor operations, gradient-based optimization, backpropagation (chapter on “The mathematical building blocks of neural networks”).
- A primer on frameworks (Keras, TensorFlow, PyTorch, JAX): how to set up your environment, understand APIs, and choose tools.
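Gradient-based optimization, one of the building blocks listed above, fits in a few lines of plain Python. The sketch below is not taken from the book; it fits a one-weight, one-bias linear model to four made-up points, with the gradients of the mean squared error written out by hand via the chain rule.

```python
# Fit y = w * x + b to four points with plain gradient descent.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated by y = 2x + 1

w, b = 0.0, 0.0
learning_rate = 0.05

for step in range(2000):
    # Forward pass: predictions and their errors.
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    # Backward pass: gradients of the mean squared error via the chain rule.
    grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = sum(2 * e for e in errors) / len(xs)
    # Update: step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Frameworks such as Keras, PyTorch and JAX automate the backward pass with automatic differentiation, but the loop structure (forward, gradients, update) is the same.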

Part II: Basic Workflows

- Classification and regression tasks: standard supervised learning setups, moving from simple datasets to more complex ones.
- Fundamentals of machine learning: setting up experiments, feature engineering, evaluation, overfitting/underfitting.
- A deep dive on Keras: model definition, training loops, callbacks, model saving and reuse.

Part III: Core Deep-Learning Architectures & Applications

- Image classification: convolutional neural networks (CNNs), convolution blocks, architectures, standard patterns.
- Convolutional network architecture patterns: bottlenecks, residual connections, MobileNets, EfficientNets.
- Interpreting what ConvNets learn: visualizing activations, feature maps, class saliency, model introspection.
- Image segmentation and object detection: U-Nets, Mask R-CNN, anchor boxes, bounding-box regression.
- Time-series forecasting: recurrent networks (RNNs), LSTMs, sequence models; applying them to forecasting problems.
- Text classification: tokenization, embeddings, sequence models; moving to language models and the Transformer architecture.
- Language models and the Transformer: building your own GPT-style model, attention, sequence generation.
- Text generation and image generation: diffusion models, generative adversarial networks (GANs), image-generation pipelines.
- Best practices for the real world: model tuning, deployment, scalability, hardware/compute considerations, monitoring and maintenance.
- The future of AI: limitations of deep learning, emerging directions, how to stay current in a fast-moving field.

Part IV: Framework, Tools & Code

- The book includes code examples for nearly every chapter; Jupyter notebooks are available online (GitHub repository by the author) so you can follow along, modify and experiment.
- It covers how to run code across the frameworks (Keras, TensorFlow, PyTorch, JAX) so you can select what fits your project.
- Code examples also show dataset loading, preprocessing, augmentation, training loops, evaluation and visualisation.

Who Should Read This Book?

- Developers with intermediate Python skills who want to transition into deep-learning development.
- Data scientists familiar with machine-learning basics (regression, classification) who want to go deeper into deep learning and generative AI.
- ML engineers needing to understand modern frameworks and production workflows (deployment, tuning, architecture).
- Hobbyists and learners interested in building systems like image generators, chatbots, language models and forecasting tools.

If you have no programming experience or are very new to machine learning and its math, you may find some parts (especially architecture, time series and generative models) challenging, but the book is designed to be accessible enough to bring you up to speed.

How to Get the Most Out of It

- Set up your environment early: install Python, set up a virtual environment or conda, and install Keras/TensorFlow/PyTorch/JAX so you can run the code hands-on.
- Work through examples: As you read chapters, type in or clone the notebook code, run it, and modify parameters, datasets and architecture.
- Experiment: For image or text models, change the dataset, the model depth and the hyperparameters. See how model behaviour changes.
- Follow the notebook repository: The author maintains GitHub notebooks; using them helps you see full workflows and allows you to focus on learning rather than boilerplate setup.
- Apply each concept to a mini-project: For example, after the image-generation chapter, build a small diffusion model for your own image dataset. After the time-series chapter, apply forecasting to a dataset you care about.
- Reflect on real-world best practices: When you reach the deployment/real-world chapter, consider how you would move from notebook to production: saving the model, serving an API, handling compute and latency, version control.
- Revisit challenging topics: Transformer/LLM chapters or generative image chapters may need multiple readings and code experiments.
- Document your work: Keep a portfolio of projects with notes: dataset, model, your modifications, results, lessons learned.

Key Takeaways

- Deep learning is accessible: even if you’re not deeply mathematical, you can build applied systems with the right guidance and code-first approach.
- Modern deep learning is multi-framework: the book emphasises Keras-first but also shows PyTorch and JAX, giving you flexibility.
- Real-world deep learning is not just architecture: data processing, augmentation, model tuning, deployment and monitoring matter just as much.
- Generative AI is now central: building your own text generators, image generators and language models isn’t just research; it’s practical.
- Staying current is key: tools change (Keras 3, JAX), architectures evolve (transformers, diffusion), so the book’s future-oriented chapters are vital.

Hard Copy: Deep Learning with Python, Third Edition

Kindle: Deep Learning with Python, Third Edition

Conclusion

Deep Learning with Python, Third Edition is a powerful and up-to-date guide for anyone wanting to go deep into deep learning using Python. Whether you’re a data scientist, developer, or curious learner, it gives you both the fundamental understanding and practical workflows to build intelligent systems—from classification to generative models. With code, explanation, and real projects, this book is a strong companion for your deep-learning journey.

What’s Really Going On in Machine Learning? Some Minimal Models (Stephen Wolfram Writings ePub Series)

Python Developer November 03, 2025 Machine Learning No comments

Introduction

In this thought-provoking work, Stephen Wolfram explores a central question in modern artificial intelligence: why do machine-learning systems work? We have built powerful neural networks, trained them on massive datasets, and achieved remarkable results. Yet at a fundamental level, the inner workings of these systems remain largely opaque. Wolfram argues that to understand ML deeply, we must strip it down into minimal models—simplified systems we can peer inside—and thereby reveal what essential phenomena underlie ML success.

Why This Piece Matters

- It challenges the dominant view of neural networks and deep learning as black boxes whose success depends on many tuned details. Wolfram proposes that much of the power of ML comes not from finely engineered mechanisms, but from the fact that many simple systems can learn and compute the right thing given enough capacity, data and adaptation.
- It connects ML to broader ideas of computational science, specifically his earlier work on cellular automata and computational irreducibility. He suggests that ML may succeed precisely because it harnesses the “computational universe” of possible programs rather than building interpretable handcrafted algorithms.
- This perspective has important implications for explainability, model design, and future research: if success comes from the “sea” of possible computations rather than neatly structured reasoning modules, then interpretability, modularity and “understanding” may inherently be limited.

What the Essay Covers

1. The Mystery of Machine Learning

Wolfram begins by observing how, despite the engineering advances in deep learning, we still lack a clear scientific explanation of why neural networks perform so well in many tasks. He points out how much of the current understanding is empirical and heuristic—“this works”, “that architecture trains well”—but lacks a conceptual backbone.

He asks: what parts of neural-net design are essential, which are legacy, and what can we strip away to find the core?

2. Traditional Neural Nets & Discrete Approximation

Wolfram shows how even simple fully-connected multilayer perceptrons can reproduce functions he defines, and then goes on to discretize weights and biases (i.e., quantizing parameters) to explore how essential real-valued precision is. He finds that discretization doesn’t radically break the learning: the system still works. This suggests that precise floating-point weights may not be the critical feature—rather, the structure and adaptation matter more.
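The idea of discretizing parameters can be illustrated with a few lines of Python. This is a post-hoc rounding sketch under made-up weights, not Wolfram's actual procedure (the essay is written in Wolfram Language and its exact discretization scheme is not reproduced here); it only shows what "snapping weights to a grid" means and how far each parameter can move.

```python
def quantize(value, step):
    """Snap a real-valued parameter to the nearest multiple of `step`."""
    return round(value / step) * step


def neuron(inputs, weights, bias):
    """Weighted sum followed by a ReLU, as in a basic perceptron unit."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


weights = [0.37, -0.92, 1.41]   # made-up "trained" parameters
bias = 0.18
step = 0.25                     # coarse grid: ..., -0.25, 0.0, 0.25, ...

q_weights = [quantize(w, step) for w in weights]
q_bias = quantize(bias, step)

inputs = [1.0, 0.5, 2.0]
print(q_weights, q_bias)                  # parameters after snapping to the grid
print(neuron(inputs, weights, bias))      # full-precision output
print(neuron(inputs, q_weights, q_bias))  # output with discretized parameters
```

Each parameter moves by at most half a grid step, so the output shifts but the unit keeps computing a similar function; the essay's stronger claim is that networks can also be trained successfully under such constraints.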

3. Simplifying the Topology: Mesh Neural Nets

Next, he reduces the neural-net topology: instead of fully connected layers, he uses a “mesh” architecture where each neuron is connected only to a few neighbours—much like nodes in a cellular automaton. He shows these mesh-nets can still learn the target function. The significance: the connectivity and “dense architecture” may be less essential than commonly believed.

4. Discrete Models & Biological-Evolution Analog

Wolfram then dives further: what if one uses completely discrete rule-based systems—cellular automata or rule arrays—that learn via mutation/selection rather than gradient descent? He finds that even such minimal discrete adaptive systems can replicate ML-style learning: gradually evolving rules, selecting based on a fitness measure, and arriving at solutions that compute the desired function. Crucially, no calculus-based gradient descent is required.
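Learning by mutation and selection, with no gradients at all, can be sketched on a very small discrete model. The example below is in the spirit of this section but is not one of Wolfram's rule arrays: it adapts a single threshold unit with integer weights to compute a three-input majority vote, and the target function, step count and seed are arbitrary choices.

```python
import random
from itertools import product

# Target behaviour: majority vote of three binary inputs.
CASES = [(bits, int(sum(bits) >= 2)) for bits in product([0, 1], repeat=3)]


def predict(params, bits):
    """Threshold unit: integer weights params[0..2] and integer bias params[3]."""
    total = sum(w * x for w, x in zip(params[:3], bits)) + params[3]
    return int(total > 0)


def loss(params):
    """Number of the 8 input cases the unit gets wrong."""
    return sum(predict(params, bits) != target for bits, target in CASES)


def evolve(steps=500, seed=0):
    """Repeatedly mutate one parameter by +/-1 and keep the change if it is no worse."""
    rng = random.Random(seed)
    params = [0, 0, 0, 0]
    history = [loss(params)]
    for _ in range(steps):
        candidate = list(params)
        index = rng.randrange(len(candidate))
        candidate[index] += rng.choice([-1, 1])   # single-point mutation
        if loss(candidate) <= loss(params):        # selection: never accept a worse unit
            params = candidate
        history.append(loss(params))
    return params, history


params, history = evolve()
print("initial loss:", history[0], "final loss:", history[-1])
print("evolved parameters:", params)
```

Accepting neutral mutations (equal loss) lets the search drift across plateaus, which matters in discrete spaces where most single changes do not improve the fitness. One exact solution for this target is weights `[1, 1, 1]` with bias `-1`, though the search may settle on a different, equally valid set.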

5. Machine Learning in Discrete Rule Arrays

He defines “rule arrays” analogous to networks: each location/time step has a rule that is adapted through mutation to achieve a goal. He shows how layered rule arrays or spatial/time varying rules lead to behavior analogous to neural networks and ML training. Importantly: the system does not build a neatly interpretable “algorithm” in the usual sense—it just finds a program that works.

6. What Does This Imply?

Here are some of his major conclusions:

- Many seemingly complex ML systems may in effect be “sampling the computational universe” of possible programs and selecting ones that approximate the desired behavior, not building an explicit mechanistic module.
- Because of this, explainability may inherently be limited: if the result is just “some program from the universe that works”, then trying to extract a neat human-readable algorithm may not succeed or may degrade performance.
- The success of ML may depend on having enough capacity, enough adaptation, and enough diversity of candidate programs, not necessarily on highly structured or handcrafted algorithmic modules.
- For future research, one might focus on understanding the space of programs rather than individual network weights: which programs are reachable, what their basins of attraction are during training, how architecture biases the search.

Key Take-aways

- Neural networks may work less like carefully crafted algorithms and more like systems that find good-enough programs in a large space of candidates.
- Simplification experiments (mesh nets, discrete rule systems) show that many details (dense connectivity, real-valued weights, gradient descent) may be convenient engineering choices rather than fundamental necessities.
- The idea of computational irreducibility (that the behavior of many simple programs cannot be predicted by any shortcut and can only be found by running them) suggests that interpretability may face a fundamental limit: one cannot always extract a tidy “logic” from a trained model.
- If you’re designing ML or deep learning systems, architecture choice, training regime and data volume matter, but the diversity of computational paths the system can explore may matter even more.
- From a research perspective, minimal models (cellular automata, rule arrays) offer a test bed for exploring the fundamentals of ML theory, which might lead to new theoretical insights or novel lightweight architectures.

Why You Should Read This

- If you’re curious not just about how to use machine learning but why it works, this essay provides a fresh and deeply contemplative viewpoint.
- For ML researchers and theorists, it offers new directions: exploring minimal models, studying program space rather than just parameter space.
- For practitioners and engineers, it provides a caution and an inspiration: caution in assuming interpretability and neat modules; inspiration to think about architecture, adaptation and search space.
- Even if the minimal systems explored are far from production-scale (Wolfram makes that clear), they challenge core assumptions and invite us to think differently.

Kindle: What’s Really Going On in Machine Learning? Some Minimal Models (Stephen Wolfram Writings ePub Series)

Conclusion

What’s really going on in machine learning? Stephen Wolfram’s minimal-model exploration suggests a provocative answer: ML works not because we’ve built perfect algorithms, but because we’ve built large, flexible systems that can explore a vast space of possible programs and select the ones that deliver results. The systems that learn may not produce neat explanations—they just produce practical behavior. Understanding that invites us to rethink architecture, interpretability, training and even the future of AI theory.

The AI Engineering Bible for Developers: Essential Programming Languages, Machine Learning, LLMs, Prompts & Agentic AI. Future Proof Your Career In the Artificial Intelligence Age in 7 Days

Python Developer November 03, 2025 AI, Machine Learning No comments

The AI Engineering Bible for Developers: A Developer’s Guide to Building & Future-Proofing AI Systems

Introduction

We are living in an era where artificial intelligence (AI) is no longer a niche research topic; it is becoming central to products, services, organisations and systems. For software developers and engineers, the challenge is not just “how to train a model” but “how to build, integrate, deploy and maintain AI systems that perform in the real world.” The AI Engineering Bible for Developers aims to fill that gap. It presents a holistic view of AI engineering, covering programming languages, machine learning, large language models (LLMs), prompt engineering and agentic AI, and frames it as a future-proof career path for developers. It promises a rapid, seven-day route to the core knowledge that helps you “future-proof your career”.

Why This Book Matters

-

Bridging the gap between ML/AI research and software engineering: Many engineers know programming but not how to build AI systems; many AI researchers know models but not how to deploy them at scale. This book speaks to developers who want to specialise in AI engineering.

-

Coverage of modern AI trends: The book appears to cover LLMs, agentic AI, prompt engineering and production systems, which are the topics organisations were actively working on in 2024-25.

-

Developer-centric: It is pitched at “developers” — meaning you don’t have to be a PhD in ML to engage with it. It focuses on programming, tools and system integration, which is practical for job readiness.

-

Career-orientation: The “future proof your career” tagline suggests this book also deals with what skills engineers must have to stay relevant as AI becomes more embedded in software.

-

Rapid learning format: The “7-day” claim may be ambitious, but it signals that the book is structured as an intensive guide — useful for accelerated learning or as a refresher for experienced developers.

What the Book Covers

Based on available descriptions and positioning, you can expect the following major themes and sections (though note: the exact chapter list may vary).

1. Programming Languages & Foundations

The book likely starts with revisiting programming languages and tooling relevant to AI engineering — for example:

-

Python (almost a default for ML/AI)

-

Supporting libraries and frameworks (e.g., NumPy, Pandas, scikit-learn, PyTorch, TensorFlow)

-

Version control, environment management, DevOps basics for AI

This sets up the developer side of the stack.

2. Machine Learning & LLMs

Next, the book likely covers the core machine-learning workflow: data, features, models, evaluation — but then extends into the world of Large Language Models (LLMs), which are now central to many AI applications:

-

What LLMs are, how they differ from classical ML models

-

Basics of prompt engineering — how to get the best out of LLMs

-

When to fine-tune vs use APIs

-

Integrating LLMs into applications (chatbots, assistants, text generation)

By giving you both the “foundation ML” and “next-gen LLM” coverage, the book helps you cover a broad spectrum.

3. Agentic AI & Autonomous Systems

One of the more advanced topics is “agentic AI” — systems that don’t just respond to prompts but take actions, plan and operate autonomously. The book presumably covers:

-

What agents are, and how reactive models differ from agents that plan

-

Architectures for agentic systems (perception, decision, action loops)

-

Use cases (e.g., autonomous assistants, bots, workflow automation)

-

Challenges such as safety, alignment, scalability, maintenance

This is where the “future-proofing” part becomes very relevant.
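The perception, decision, action loop mentioned above can be sketched in a few lines. This is a toy illustration, not code from the book: the `Room` environment, the thermostat-style goal and all function names are hypothetical, and a real agent would replace `decide` with a planner or an LLM call.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Hypothetical environment: a room whose temperature the agent can change."""
    temperature: float

    def apply(self, action: str) -> None:
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0


def perceive(room: Room) -> float:
    """Perception: read the current state of the environment."""
    return room.temperature


def decide(observation: float, goal: float, tolerance: float = 0.5) -> str:
    """Decision: pick an action that moves the observation toward the goal."""
    if observation < goal - tolerance:
        return "heat"
    if observation > goal + tolerance:
        return "cool"
    return "idle"


def run_agent(room: Room, goal: float, max_steps: int = 20) -> list[str]:
    """Action loop: perceive, decide, act, and stop once the goal is reached."""
    history = []
    for _ in range(max_steps):
        action = decide(perceive(room), goal)
        history.append(action)
        if action == "idle":
            break
        room.apply(action)
    return history


room = Room(temperature=17.0)
actions = run_agent(room, goal=21.0)
print(actions, room.temperature)
```

The `max_steps` cap is a deliberate design choice: an autonomous loop should have a hard stop so that a bad decision rule cannot run forever.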

4. Prompt Engineering, Deployment & Production-Engineering

Building AI systems is more than coding a model. The book likely includes sections on:

-

Prompt design and best practices: how to craft prompts to get good results from LLMs

-

Integration: APIs, SDKs, system architecture, microservices

-

Deployment: how to package, containerise, serve models, monitor and maintain them

-

Scaling: handling latency, throughput, cost, model updates

-

Ethics, governance, security: dealing with bias, misuse, drift

These sections help turn prototype models into real systems.

5. Career Skills & Developer Mindset

As the title promises “future proof your career”, there’s likely content on:

-

What employers look for in AI engineers

-

Skills roadmap: from developer → ML engineer → AI engineer → AI architect

-

How to stay current (tools, frameworks, model families)

-

Building a portfolio, contributing to open source, problem-solving mindset

-

Understanding the AI ecosystem: data, compute, models, infrastructure

This helps you not just build systems, but position yourself for evolving roles.

Who Should Read This Book?

-

Software developers familiar with coding who want to specialise in AI, not just “add a bit of ML” but become deeply capable in AI engineering.

-

ML engineers who work primarily on models but want to broaden into production systems, agents and full-stack AI engineering.

-

Technical leads or architects who need to understand the broader AI engineering stack — how models, data, infrastructure and business outcomes connect.

-

Students or career-changers aiming to move into AI engineering roles and wanting a structured guide that covers modern LLMs and agents.

If you have very little programming experience or are unfamiliar with basic machine learning concepts, you may find parts of the book fast-paced — but it could still serve as a roadmap to what you need to learn.

How to Get the Most Out of It

-

Read actively: Keep a coding environment ready — when examples or concepts are presented, stop and code them or sketch ideas.

-

Apply real code: For sections on prompt engineering or agentic systems, experiment with open-source models (e.g., via Hugging Face) or hosted APIs (e.g., OpenAI) and build small prototypes.

-

Build a mini project: After reading about agents or production deployment, attempt a small end-to-end system: e.g., a text-based assistant, or a workflow automation agent.

-

Document your learning: Create a portfolio of what you build — prompts you designed, agent design diagrams, deployment pipelines.

-

Reflect on career growth: Use the book’s roadmap to identify what skills you need, set goals (e.g., learn Docker + Kubernetes, learn Hugging Face inference, build RAG system).

-

Stay current: Because AI evolves quickly, use the book as a base but follow up with recent articles, model release notes, tooling updates.

What You’ll Walk Away With

After reading and applying this book, you should walk away with:

-

A developer-focused understanding of AI engineering — how to build models, integrate them into systems and deploy at scale.

-

Proficiency with LLMs, prompt engineering, and agentic AI — not just theory, but practice.

-

A mini-portfolio of coded prototypes or applications demonstrating your capability.

-

An actionable roadmap for your career progression in AI engineering.

-

Awareness of the challenges in AI systems (scaling, monitoring, drift, ethics) and how to address them.

-

Confidence to position yourself for roles such as AI Developer, AI Engineer, AI Architect or Lead Engineer in an AI-centric organisation.

Hard Copy: The AI Engineering Bible for Developers: Essential Programming Languages, Machine Learning, LLMs, Prompts & Agentic AI. Future Proof Your Career In the Artificial Intelligence Age in 7 Days

Kindle: The AI Engineering Bible for Developers: Essential Programming Languages, Machine Learning, LLMs, Prompts & Agentic AI. Future Proof Your Career In the Artificial Intelligence Age in 7 Days

Conclusion

The AI Engineering Bible for Developers is a timely and practical book for developers who want to evolve into AI engineers — not just building models, but software systems that leverage AI, large language models and autonomous agents. Its mix of programming, model-tech, system-tech and career guidance makes it a strong choice for anyone serious about staying ahead in the AI transformation.

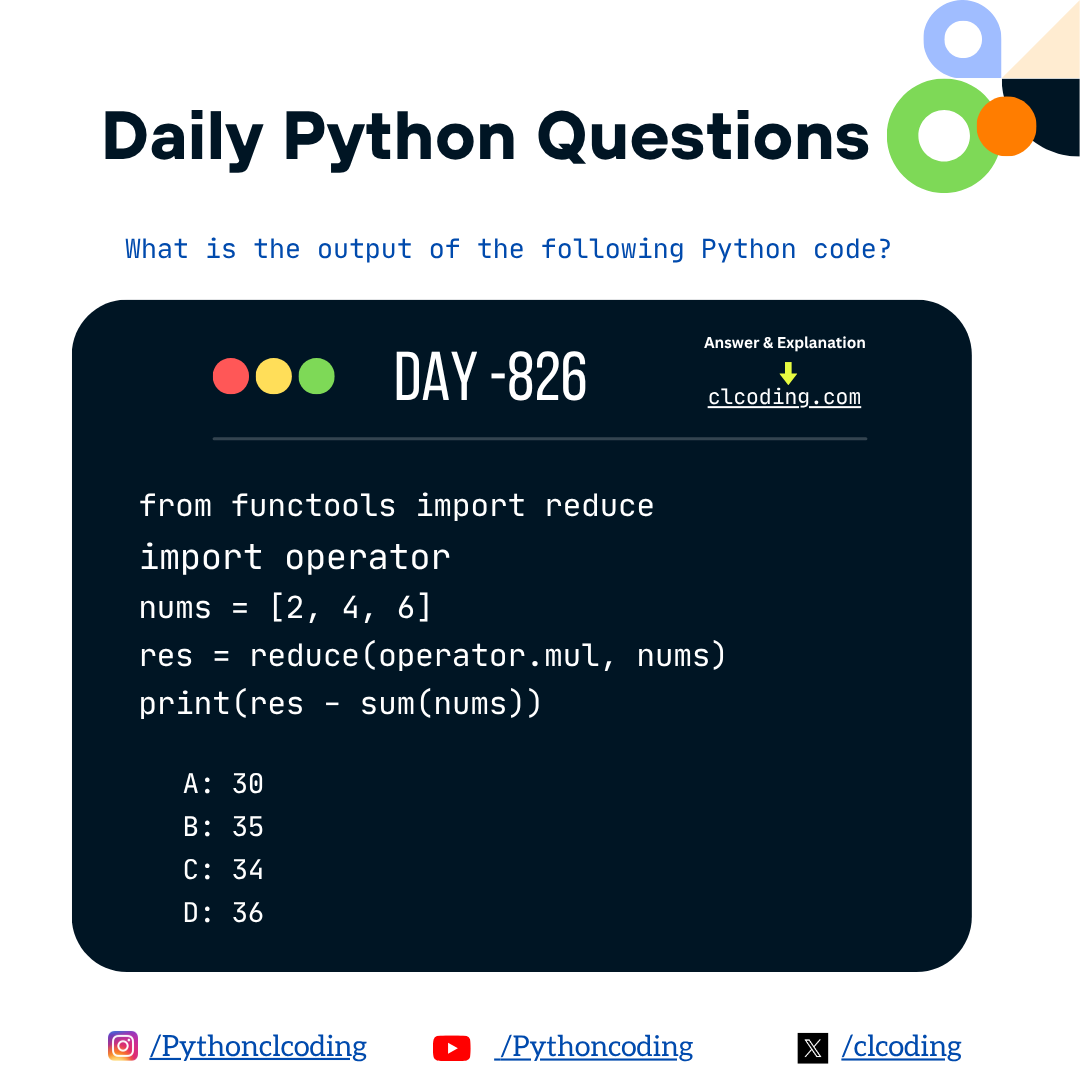

Python Coding challenge - Day 826| What is the output of the following Python Code?

Python Developer November 03, 2025 Python Coding Challenge No comments

Code Explanation:

Python Coding challenge - Day 825| What is the output of the following Python Code?

Python Developer November 03, 2025 Python Coding Challenge No comments

Code Explanation:


Python Coding Challenge - Question with Answer (01041125)

Python Coding November 03, 2025 Python Quiz No comments

Step-by-step explanation:

-

range(3) gives the numbers 0, 1, 2.

-

The loop runs three times, once for each i.

-

Inside the loop, the first statement is continue.

-

continue immediately skips the rest of the loop body for that iteration.

-

So print(i) is never reached or executed.

Output:

(no output)

💡 Key point:

When Python hits continue, it jumps straight to the next iteration of the loop — skipping all remaining code below it for that cycle.

So even though the loop runs 3 times, print(i) never runs at all.
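The question's code is not reproduced in this post, so the snippet below is a reconstruction based only on the explanation above. The final print line is an addition for illustration, showing that the loop did run through all three values of i.

```python
for i in range(3):
    continue   # skip the rest of the body for this iteration
    print(i)   # unreachable, so nothing is printed inside the loop

# Added for illustration: i still holds the last value produced by range(3).
print("loop finished, last i =", i)
```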


Sunday, 2 November 2025

Complete Data Science,Machine Learning,DL,NLP Bootcamp

Python Developer November 02, 2025 Data Science, Machine Learning, Udemy No comments

Introduction

In today’s data-driven world, the demand for professionals who can extract insights from data, build predictive models, and deploy intelligent systems is higher than ever. The “Complete Data Science, Machine Learning, DL, NLP Bootcamp” is a comprehensive course that aims to take you from foundational skills to advanced applications across multiple domains: data science, machine learning (ML), deep learning (DL), and natural language processing (NLP). By the end of the course, you should be able to work on real-world projects, understand the theory behind algorithms, and use industry-standard tools.

Why This Course Matters

-

Breadth and depth: Many courses focus on one domain (e.g., ML or DL). This course covers data science, ML, DL, and NLP in one unified path, giving you a wide-ranging skill set.

-

From the ground up to advanced level: Whether you are just beginning or you already know some Python and want to level up, this course is structured to guide you through basics toward advanced topics.

-

Applied project focus: It emphasises hands-on work — not just theory but real code, real datasets, and end-to-end workflows. This makes it more practical for job readiness or building a portfolio.

-

Industry-relevant tools: The course engages with Python libraries (Pandas, NumPy, Scikit-Learn), deep-learning frameworks (TensorFlow, PyTorch), and NLP tools — equipping you with tools you’ll use in real jobs.

-

Multi-domain skill set: Because ML and NLP are increasingly integrated (e.g., in chatbots, speech analytics, recommendation systems), having skills across DL and NLP makes you more versatile.

What You’ll Learn – Course Highlights

Here’s a breakdown of the kind of material covered — note that exact structure may evolve, but these themes are typical:

1. Data Science Foundations

-

Setting up your Python environment: Anaconda, virtual environments, best practices.

-

Python programming essentials: data types, control structures, functions, modules, and data structures (lists, dictionaries, sets, tuples).

-

Data manipulation and cleaning using Pandas and NumPy, exploratory data analysis (EDA), visualization using Matplotlib/Seaborn.

-

Basic statistics, probability theory, descriptive and inferential statistics relevant for data science.

2. Machine Learning

-

Supervised learning: linear regression, logistic regression, decision trees, random forests, support vector machines.

-

Unsupervised learning: clustering (K-means, hierarchical), dimensionality reduction (PCA, t-SNE).

-

Feature engineering and selection: converting raw data into model-ready features, handling categorical variables, missing data.

-

Model evaluation: train/test splits, cross-validation, performance metrics (accuracy, precision, recall, F1-score, ROC/AUC).

-

Advanced ML topics: ensemble methods, boosting (e.g., XGBoost), hyperparameter tuning.
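The performance metrics listed above follow directly from the four confusion-matrix counts. Here is a minimal pure-Python sketch for binary labels. The labels are made-up illustrative data, and in practice you would more likely call a library such as scikit-learn.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall and F1 as a dictionary."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels purely for illustration.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision asks how many predicted positives were correct, while recall asks how many actual positives were found. F1 is their harmonic mean, so it drops sharply when either one is low.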

3. Deep Learning (DL)

-

Fundamentals of neural networks: perceptron, activation functions, cost functions, forward/back-propagation.

-

Deep architectures: convolutional neural networks (CNNs) for image data, recurrent neural networks (RNNs) / LSTMs for sequence data.

-

Transfer learning and pretrained models: adapting existing networks to new tasks.

-

Deployment aspects: saving/loading models, performance considerations, perhaps integration with web or mobile (depending on the course version).
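To make the perceptron and activation-function ideas concrete, here is a minimal sketch of a single perceptron learning the logical AND function. It uses the classic perceptron update rule with a step activation, not back-propagation, and the function names and training settings are illustrative assumptions rather than course material.

```python
def step_activation(z):
    """Step activation: fire (1) only when the weighted sum is positive."""
    return 1 if z > 0 else 0


def predict(weights, bias, x):
    """Forward pass: weighted sum of the inputs plus bias, then activation."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step_activation(z)


def train_perceptron(samples, epochs=20, lr=1):
    """Perceptron rule: nudge weights by lr * error * input after each mistake."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors means training is done
            break
    return weights, bias


# Truth table for logical AND, which is linearly separable.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, x) for x, _ in and_gate]
print(predictions)
```

A single perceptron can only separate classes with a straight line, which is why deeper networks with differentiable activations and back-propagation are needed for problems such as XOR.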

4. Natural Language Processing (NLP)

-

Text preprocessing: tokenization, stop-words, stemming/lemmatization, word embeddings.

-

Classic NLP models: Bag-of-Words, TF-IDF, sentiment analysis, topic modelling.

-

Deep NLP: sequence models, attention, transformers (BERT, GPT-style), and building simple chatbots or language-models.

-

End-to-end NLP project: from text data to cleaned dataset, to model, to evaluation and possibly deployment.
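As a small illustration of the Bag-of-Words and TF-IDF ideas above, the sketch below scores terms with plain Python. It assumes whitespace tokenization, non-empty documents and the unsmoothed formula tf × log(N / df). Libraries typically use smoothed variants, so their numbers will differ.

```python
import math
from collections import Counter


def tokenize(text):
    """Naive tokenizer (an assumption): lowercase and split on whitespace."""
    return text.lower().split()


def tf_idf(documents):
    """Return one {term: score} dictionary per document."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)  # the bag-of-words counts for this document
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


# Made-up corpus purely for illustration.
docs = ["the cat sat", "the dog sat", "the bird flew"]
scores = tf_idf(docs)
print(scores[0])
```

A term that appears in every document ("the") scores zero, while a term unique to one document ("cat") scores highest. That is the intuition behind using TF-IDF to surface distinctive words.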

5. MLOps & Deployment (if included)

-

Building pipelines: end-to-end workflow from data ingestion to model training to deployment.

-

Deployment tools: Docker, cloud, APIs, version control.

-

Real-world projects: you may work on full workflows which combine the above domains into deployable applications.

Who Should Take This Course?

This course is ideal for:

-

Beginners with Python who want to move into the data-science/ML field and need a structured path.

-

Data analysts or programmers who know some Python and want to broaden into ML, DL and NLP.

-

Students or professionals looking to build a portfolio of projects and get ready for roles such as Data Scientist or Machine Learning Engineer.

-

Hobbyists or career-changers who want to understand how all the pieces of AI/ML systems fit together — from statistics to DL to NLP to deployment.

If you are completely new to programming, you may find some modules challenging, but the course does cover foundational material. It helps if you have some familiarity with Python basics or are willing to devote time to a steep learning curve.

How to Get the Most Out of It

-

Follow along actively: Don’t just watch videos — code alongside, type out examples, experiment with changes.

-

Do the projects: The real value comes from completing the end-to-end projects and building your own variations.

-

Extend each project: After finishing the guided version, ask: “How can I change the data? What feature could I add? Could I deploy this as a simple web app?”

-

Keep a portfolio: Store your notebooks, project code, results and maybe a short write-up of what you did and what you learned. This is critical for job applications or freelance work.

-

Balance theory and practice: While getting hands-on is essential, pay attention to the theoretical sections — understanding why algorithms work will make you a stronger practitioner.

-

Use version control: Use Git/GitHub to track your projects; this both helps your workflow and gives you a visible portfolio.

-

Supplement learning: For some advanced topics (e.g., transformers in NLP or detailed MLOps workflows), look for further resources or mini-courses to deepen your understanding.

-

Regular revision: The field moves fast — revisit earlier modules, update code for new library versions, and keep experimenting.

What You’ll Walk Away With

By completing the course you should have:

-

A solid foundation in Python, data science workflows, data manipulation and visualization.

-

Confidence to build and evaluate ML models using modern libraries.

-

Experience in deep-learning architectures and understanding of when to use them.

-

Exposure to NLP workflows and initial experience with language-based AI tasks.

-

Several completed projects across domains (data science, ML, DL, NLP) that you can show.

-

Understanding of model deployment or at least the beginning of that path (depending on how deep the course goes).

-

Readiness to apply for roles like Data Scientist, Machine Learning Engineer, NLP Engineer or to start your own data-intensive projects.

Join Free: Complete Data Science,Machine Learning,DL,NLP Bootcamp

Conclusion

The “Complete Data Science, Machine Learning, DL, NLP Bootcamp” is a thorough and ambitious course that aims to equip learners with a wide-ranging skill set for the modern AI ecosystem. If you are ready to commit time and energy, build projects, and engage deeply, this course can serve as a central part of your learning journey into AI and data science.

