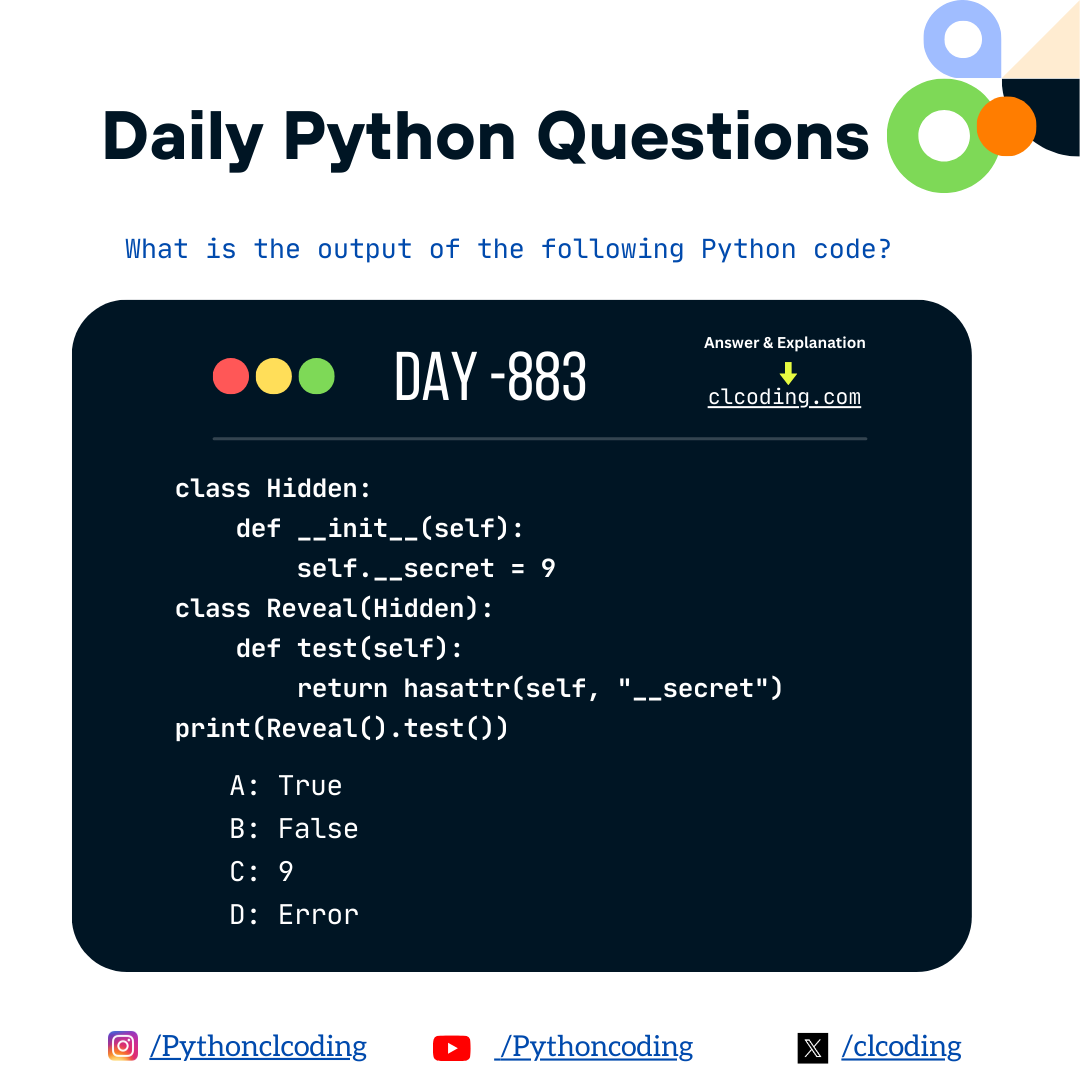

Code Explanation:

1. Class Definition

class Hidden:

A new class named Hidden is defined.

Its instances carry one attribute whose name starts with a double underscore, which Python treats as "private" through name mangling.

2. Constructor of Hidden

def __init__(self):

self.__secret = 9

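__init__ is the initializer (commonly called the constructor); it runs whenever a Hidden object is created.

Because the name __secret starts with two underscores and does not end with two, Python applies name mangling to it inside the class body. The attribute is "private" by convention only and can still be reached from outside the class.

The attribute is actually stored under the mangled name _Hidden__secret.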
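A quick way to see the mangled name is to inspect the instance dictionary. This is a minimal sketch that reuses the Hidden class from the walkthrough; the variable name obj is only for illustration.

```python
class Hidden:
    def __init__(self):
        # Written as __secret, but stored as _Hidden__secret
        self.__secret = 9


obj = Hidden()

# The instance dictionary shows the name Python actually used
print(vars(obj))            # {'_Hidden__secret': 9}

# The value is still reachable from outside through the mangled name
print(obj._Hidden__secret)  # 9
```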

3. Child Class Definition

class Reveal(Hidden):

Reveal is a subclass of Hidden.

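It inherits __init__ from Hidden, so every Reveal object also gets the attribute, stored under the mangled name _Hidden__secret (the prefix comes from the class where the assignment was written, not from the subclass).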
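The sketch below illustrates why the subclass cannot reach the attribute by its short name. The peek method is a hypothetical helper added for this example and is not part of the original code. Inside Reveal, self.__secret is mangled with the Reveal prefix, so the lookup targets a name that was never set.

```python
class Hidden:
    def __init__(self):
        self.__secret = 9  # stored as _Hidden__secret


class Reveal(Hidden):
    def peek(self):  # hypothetical helper, not in the original code
        # Inside Reveal this is compiled as self._Reveal__secret
        return self.__secret


r = Reveal()
print(vars(r))  # {'_Hidden__secret': 9}

try:
    r.peek()
except AttributeError as exc:
    # _Reveal__secret was never assigned, so the lookup fails
    print("AttributeError:", exc)
```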

4. Method in Reveal

def test(self):

return hasattr(self, "__secret")

hasattr(self, "__secret") checks if the object has an attribute named "__secret".

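BUT the assignment in Hidden was name-mangled, while the string passed to hasattr is looked up exactly as written (string literals are never mangled). The real attribute name is:

_Hidden__secret

Therefore, the object has no attribute named "__secret".

So the result of hasattr(...) will be False.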
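To contrast the two lookups side by side, here is a small sketch. The test method matches the walkthrough; test_mangled is a hypothetical addition that passes the mangled name instead.

```python
class Hidden:
    def __init__(self):
        self.__secret = 9


class Reveal(Hidden):
    def test(self):
        # The string is used as-is, so this looks for "__secret"
        return hasattr(self, "__secret")

    def test_mangled(self):  # hypothetical method, for comparison only
        # This is the name the attribute was actually stored under
        return hasattr(self, "_Hidden__secret")


r = Reveal()
print(r.test())          # False
print(r.test_mangled())  # True
```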

5. Creating Object and Printing

print(Reveal().test())

A new Reveal object is created.

.test() is called → returns False.

False is printed on the screen.

Final Output

False
